Here is a poll about people's concerns regarding privacy.

Exploring Relationships Between Interaction Attributes and Experience


Eva Lenz, Sarah Diefenbach and Marc Hassenzahl proposed the Interaction Vocabulary, derived from evaluating different interactions through the why/what/how framework (a simplified form of goal-oriented analysis). Each row pairs an interaction attribute with its opposite and lists the experiences associated with each pole.

| Experience (left pole) | Interaction attribute (left pole) | Interaction attribute (right pole) | Experience (right pole) |
|---|---|---|---|
| Esteem, focus on the interaction itself, significance of the present moment, relaxing, calming, accuracy, care, appreciation of interaction/product | slow | fast | Animating, stimulating, activating, efficiency, focus on the instrumental goal of the interaction, expression of willpower |
| Ritualization, every step is meaningful, rewarding, emphasis on progress and advance of the process, approaching a goal step by step, clear structure, being guided through the process | stepwise | fluent | Autonomy, continuous influence, power and right to change what's happening at any time during the process, no barriers, fluent integration into a running process, spurring instead of interrupting |
| Instant feedback makes your own effect tangible, competence, feeling of your own impact creates a feeling of security, you see what you do, makes immediate correction possible, nothing in between, you experience what you do, increase of competence, the instant feedback creates a feeling of recognition | instant | delayed | Emphasizing the moment of interaction, creating awareness, centering on the interaction itself rather than its instrumental effect |
| Influence by intuition, control | uniform | diverging | Unusual, unnatural, amplified, grasping for attention |
| Creates a feeling of security | constant | inconstant | Liveliness, suspense, you can't adapt yourself to it, unreliable, chance as an idea generator |
| Uncertainty, ambiguity, magic, handing over responsibility (the interaction happens somewhere else), you don't put much of yourself into it | mediated | direct | Significance of your own doing, face-to-face contact, experiencing affinity, self-made, close relation to the product, feeling of constant control |
| Not feeling part of it, feeling of distance | spatial separation | spatial proximity | Personal contact, feeling of relatedness, safety (you know exactly what you did), being a part of it, intensive examination of details |
| Deeper analysis is needed, room for variation = room for competence, room for new ideas, exploration | approximate | precise | Safety, no changes = room to concentrate on something else/competence in other fields, exact idea of the result, always exactly the same |
| Carefulness, awareness, appreciation, building a relationship with the thing (being gentle with it), being a part of it, revaluation of the action, raises the quality, allows performing a loving gesture | gentle | powerful | Archaic interaction, sign of strength, power, effectiveness |
| Low challenge, no room to experience competence, no room for improvement, becomes a side issue, doesn't matter | incidental | targeted | Appreciation, significance of interaction, worthy of attention, high challenge, high concentration, room for competence |
| Conscious of the significance of your own doing, assurance, security, goal-mode, seeing what is going on, expressive, very easy | apparent | covered | Magic, excitement, exploration, action-mode, witchcraft, deeply impressing somebody |

Sources:

E. Lenz, S. Diefenbach, and M. Hassenzahl, “Exploring relationships between interaction attributes and experience,” in Proceedings of the 6th International Conference on Designing Pleasurable Products and Interfaces, 2013, pp. 126–135.

Proportionality Design Method

The Data Quality principle of the Fair Information Practices states that the information obtained from users should be relevant to the purposes for which it is used:

> “Personal data should be relevant to the purposes for which they are to be used, and, to the extent necessary for those purposes, should be accurate, complete and kept up-to-date.”

Giovanni Iachello and Gregory D. Abowd take this as a starting point and formulate the principle of proportionality:

> “Any application, system, tool or process should balance its utility with the rights to privacy (personal, informational, etc.) of the involved individuals”

Based on this principle, they propose the Proportionality design method:

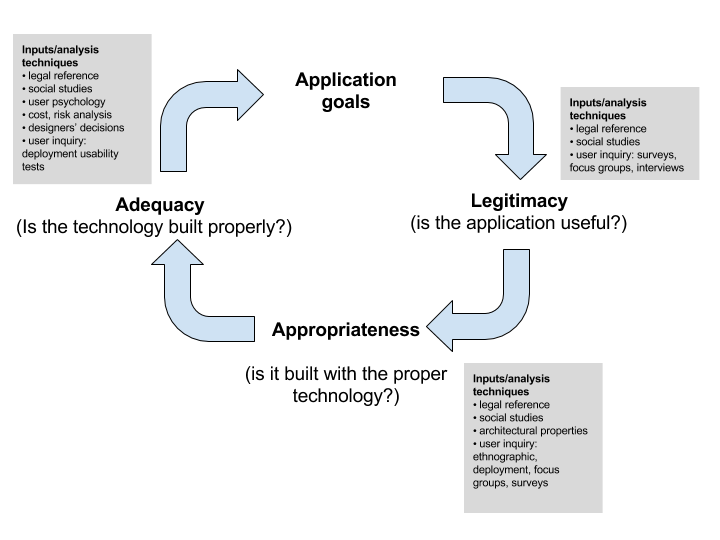

Throughout the development cycle of the application, the parties involved need to verify its legitimacy, appropriateness and adequacy:

- Legitimacy: Verify that the application is useful to its users. What function does the application fulfil?

- Appropriateness: Analyse whether alternative implementations, using different technologies, satisfy the goal of the application without posing a risk to the users' privacy.

- Adequacy: Analyse whether the alternative technologies are correctly implemented.
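As a rough illustration, the three checks can be kept as a design-review checklist that is revisited at each stage of development. The sketch below is a hypothetical Python structure: the class `ProportionalityReview`, its fields and its methods are illustrative assumptions, not part of Iachello and Abowd's method, which is a qualitative evaluation rather than a yes/no test. An unanswered check (`None`) is treated the same as a failed one.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ProportionalityReview:
    """Hypothetical record of one review pass over an application design."""
    application: str
    legitimacy: Optional[bool] = None       # Is the application useful to its users?
    appropriateness: Optional[bool] = None  # Does an implementation meet the goal without a privacy risk?
    adequacy: Optional[bool] = None         # Is the chosen technology correctly implemented?

    def open_questions(self) -> List[str]:
        """Return the checks not yet answered positively, in the order listed."""
        checks = [
            ("legitimacy", self.legitimacy),
            ("appropriateness", self.appropriateness),
            ("adequacy", self.adequacy),
        ]
        return [name for name, passed in checks if passed is not True]

    def is_proportionate(self) -> bool:
        """True only when all three checks have been answered positively."""
        return not self.open_questions()


# Usage: a review where adequacy has not been assessed yet.
review = ProportionalityReview("location-sharing app", legitimacy=True, appropriateness=True)
print(review.open_questions())    # ['adequacy']
print(review.is_proportionate())  # False
```

Keeping the answers as a record rather than a single verdict makes it easy to repeat the review as the design changes and to see which of the three questions still needs attention.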

Sources:

G. Iachello and G. D. Abowd, “Privacy and proportionality: adapting legal evaluation techniques to inform design in ubiquitous computing,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 2005, pp. 91–100.