This content has been moved to The Privacy Toolkit: The Seven Types of Privacy

Monthly Archives: December 2017

Privacy Facets (PriF)

Keerthi Thomas, Arosha K. Bandara, Blaine A. Price and Bashar Nuseibeh propose a process (requirements distillation) and a framework (PriF) for capturing the privacy-related requirements of mobile applications during development.

Requirements distillation process

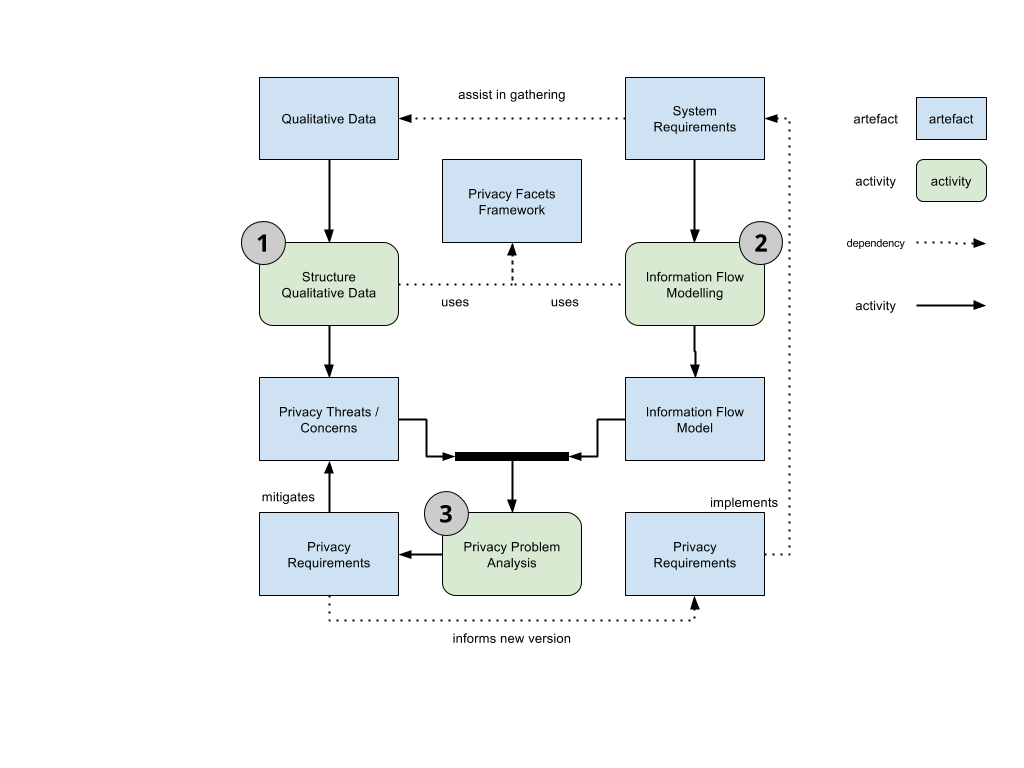

The requirements distillation process consists of three main phases: “Structuring of the Qualitative Data”, “Information Flow Modelling” and “Privacy Problem Analysis”.

Structuring Qualitative Data: The Privacy Facets (PriF) framework is used to structure the qualitative data with a set of predefined codes adapted to identifying privacy-sensitive contexts. The outcome of this phase is a set of privacy threats or concerns raised by the users.

Information Flow Modelling: In the second phase, problem models of information flows are developed from the information-flow problem patterns provided in the PriF framework. These models capture how information is created and disseminated to other users.

Privacy Problem Analysis: In the third phase, the privacy-sensitive contexts and their associated privacy threats or concerns are analysed against the information-flow models to derive a list of privacy requirements.
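The second and third phases can be illustrated with a small sketch. It does not reproduce PriF's actual information-flow problem patterns or its privacy arguments language; it only assumes that a flow model can be written as hypothetical `Flow` records (who passes which information to whom) and that a coded concern can be written as a hypothetical `Concern` (an owner who does not want some information to reach a given audience). The names `disseminated_to` and `derive_requirements`, and the example parties, are illustrative assumptions.

```python
from collections import defaultdict, deque
from dataclasses import dataclass

@dataclass(frozen=True)
class Flow:
    """Hypothetical flow: `sender` passes a piece of `info` to `receiver`."""
    info: str
    sender: str
    receiver: str

@dataclass(frozen=True)
class Concern:
    """Hypothetical coded concern: `owner` does not want `info` to reach `audience`."""
    owner: str
    info: str
    audience: str

def disseminated_to(flows, info, creator):
    """Phase 2 stand-in: every party `info` can reach from its creator (breadth-first search)."""
    graph = defaultdict(set)
    for f in flows:
        if f.info == info:
            graph[f.sender].add(f.receiver)
    reached, queue = set(), deque([creator])
    while queue:
        for nxt in graph[queue.popleft()]:
            if nxt != creator and nxt not in reached:
                reached.add(nxt)
                queue.append(nxt)
    return reached

def derive_requirements(concerns, flows):
    """Phase 3 stand-in: turn each concern the flow model can violate into a requirement."""
    requirements = []
    for c in concerns:
        if c.audience in disseminated_to(flows, c.info, c.owner):
            requirements.append(
                f"{c.owner}'s {c.info} shall not be disseminated to {c.audience}."
            )
    return requirements

# Hypothetical example model and concerns.
flows = [
    Flow("location", "alice", "app_server"),
    Flow("location", "app_server", "bob"),
    Flow("photo", "alice", "carol"),
]
concerns = [
    Concern("alice", "location", "bob"),  # violated: alice -> app_server -> bob
    Concern("alice", "photo", "bob"),     # not violated: photos only reach carol
]
for req in derive_requirements(concerns, flows):
    print(req)  # prints "alice's location shall not be disseminated to bob."
```

Following flows transitively, rather than only direct ones, is what lets the sketch catch the case where information reaches an unwanted user indirectly through an intermediary such as a server.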

Privacy Facets

The Privacy Facets is a framework that provides:

- analytical tools such as thematic codes, heuristics, facet questions and extraction rules to structure qualitative data

- information-flow problem patterns and a privacy arguments language to model privacy requirements.

In the first phase of the process, the system analyst structures the qualitative data using heuristic-based categories, for example:

- Negative Behaviour Patterns (NBP): Situations in which the user chooses not to use an application because of privacy concerns.

- Negative Emotional Indicators (NEI): Keywords indicating that the user may have privacy concerns when using the application.
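As a rough illustration of how these two heuristics could flag interview statements, the sketch below tags a statement as NEI when it contains an emotional keyword and as NBP when it describes avoiding or disabling a feature. The keyword lists, regular expressions and the `code_statement` function are hypothetical assumptions, not PriF's published codes; in practice the coding is done by an analyst, and simple matching like this would at most suggest candidates for review.

```python
import re

# Hypothetical keyword lists; PriF's actual thematic codes are not reproduced here.
NEI_KEYWORDS = {"worried", "uncomfortable", "creepy", "scared", "annoyed"}
NBP_PATTERNS = [
    r"\bturn(?:ed)? off\b",
    r"\bstopped using\b",
    r"\bdon't use\b",
    r"\buninstall(?:ed)?\b",
]

def code_statement(statement):
    """Return the heuristic codes (NBP and/or NEI) that apply to one interview statement."""
    text = statement.lower()
    words = set(re.findall(r"[a-z']+", text))
    codes = set()
    if words & NEI_KEYWORDS:
        codes.add("NEI")  # an emotional keyword suggests a privacy concern
    if any(re.search(p, text) for p in NBP_PATTERNS):
        codes.add("NBP")  # the user avoided or disabled a feature
    return codes

# Hypothetical interview excerpts.
transcript = [
    "I turned off location sharing because it felt creepy.",
    "I use the app every morning to check the weather.",
    "I'm worried my boss could see where I am.",
]
for line in transcript:
    print(sorted(code_statement(line)), line)
```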

Approximate Information Flows (AIF)

The content has been moved to:

https://www.privacy-toolkit.net/approximate-information-flows-aif/